I’ve often encountered the frustrating reality that there are limited resources available when it comes to scaling UniFi controllers. While UniFi’s documentation provides basic guidelines, there’s a noticeable gap in comprehensive advice for those of us managing larger controllers. This article is my contribution to filling that gap, based on my own experiences and hard-learned lessons from scaling a UniFi controller for 12,000+ devices and over 100,000 daily users.

The Importance of Optimizing System Properties

When dealing with a larger deployment, the default settings simply won’t cut it. You need to dive into the system.properties file and start making adjustments. Here are the key configurations I’ve found crucial for scaling effectively.

- unifi.xss (Stack Size Setting):

- Purpose: Controls the maximum stack size for the JVM (Java Virtual Machine) threads.

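- My Approach: I’ve found that increasing the stack size is essential when running a high number of concurrent threads. Adjusting this setting gives each thread enough stack space to handle the heavy load without running into stack overflow errors. Keep in mind that every thread reserves its own stack, so a larger value multiplied by a high thread count adds to memory use outside the heap.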

- unifi.xmx (Maximum Heap Size) & unifi.xms (Initial Heap Size):

- Purpose: Defines the amount of memory allocated to the JVM.

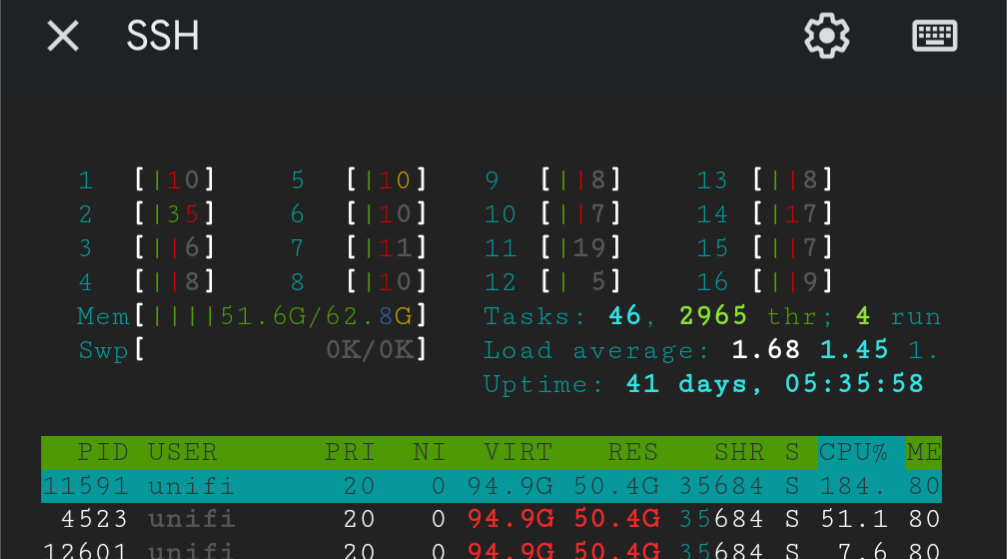

- My Approach: With 64 GB of RAM at my disposal on a GCP E2 instance, I’ve allocated a significant portion of it to the heap. I started with unifi.xms at 16 GB and unifi.xmx at 48 GB, which has proven sufficient to handle peak loads. However, I avoid the G1 garbage collector (unifi.G1GC): after running into errors in my inform stat queue, A/B testing kept pointing back to unifi.G1GC as the cause.

- inform_stat.num_threads:

- Purpose: Manages the number of threads dedicated to handling incoming statistics from devices.

- My Approach: On a 16-core CPU, increasing the number of threads has been essential to efficiently process data from 12,000+ devices. I’ve fully utilized the available cores to ensure the system remains responsive, even under heavy load.

- inform_stat.thread_queue_size:

- Purpose: Determines the size of the queue that holds incoming statistics before they are processed.

- My Approach: To prevent data loss during peak traffic, I’ve increased the queue size. Balancing this with the thread count has been crucial in managing the sheer volume of traffic my controller handles.

- inform.max_keep_alive_requests:

- Purpose: Controls the maximum number of keep-alive requests the controller will handle before closing the connection.

- My Approach: Given the high-density environment, I’ve increased this value to maintain stable connections across thousands of devices. However, I’ve done so cautiously to avoid resource exhaustion.
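To make the settings above concrete, here is a minimal Python sketch that renders them as system.properties lines and sanity-checks the heap values. It assumes unifi.xms and unifi.xmx are expressed in megabytes, which is worth verifying for your controller version. The helper names are hypothetical, the thread, queue, and keep-alive numbers are illustrative placeholders rather than values from my deployment, and unifi.xss is left out because its expected format isn't covered here.

```python
# Hypothetical helper: render the system.properties overrides discussed above.
# Assumption: unifi.xms / unifi.xmx are expressed in megabytes (verify for your version).
# The thread, queue, and keep-alive numbers are illustrative placeholders.

MB_PER_GB = 1024


def heap_mb(gigabytes: int) -> int:
    """Convert a heap size in GB to the MB value written to system.properties."""
    return gigabytes * MB_PER_GB


def check_heap(settings: dict, host_ram_gb: int) -> None:
    """Reject an initial heap larger than the max heap, or a max heap that is
    not below the host's total RAM (which would leave nothing for the OS,
    thread stacks, and non-heap JVM memory)."""
    xms = int(settings["unifi.xms"])
    xmx = int(settings["unifi.xmx"])
    if xms > xmx:
        raise ValueError("unifi.xms must not exceed unifi.xmx")
    if xmx >= heap_mb(host_ram_gb):
        raise ValueError("unifi.xmx leaves no headroom on this host")


def render_properties(settings: dict) -> str:
    """Render key=value lines, sorted so the output is stable across runs."""
    return "\n".join(f"{key}={value}" for key, value in sorted(settings.items()))


settings = {
    # Heap sizes from the article: 16 GB initial, 48 GB max on a 64 GB host.
    "unifi.xms": heap_mb(16),
    "unifi.xmx": heap_mb(48),
    # Placeholders only; tune against your own load.
    "inform_stat.num_threads": 16,
    "inform_stat.thread_queue_size": 2000,
    "inform.max_keep_alive_requests": 500,
}

check_heap(settings, host_ram_gb=64)
print(render_properties(settings))
```

The output can be pasted into system.properties, and the heap check catches the two most common mistakes: an initial heap larger than the maximum, and a maximum heap sized as if the JVM had the whole machine to itself.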

Learning from Common Issues: CPU Usage, Memory Allocation, and Dropped Informs

High CPU usage and dropped informs are symptoms that many of us managing large networks face. Here’s how I’ve addressed these challenges:

High CPU Usage: I’ve found that merely increasing CPU resources isn’t always the answer. Optimizing memory allocation through the unifi.xmx and unifi.xms settings has proven more effective, because an undersized heap forces the JVM to spend CPU time on frequent garbage collection. By increasing these values and carefully monitoring CPU usage, I’ve been able to keep my system running smoothly.

Dropped Informs: I’ve increased the number of threads allowed to handle informs, along with the inform stat queue size (inform_stat.thread_queue_size) and its thread count (inform_stat.num_threads).
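Why the queue size and the thread count need to be tuned together can be shown with a toy model. The Python sketch below is a simplified, hypothetical simulation, not UniFi’s implementation: stats arrive in discrete ticks, a bounded queue (standing in for inform_stat.thread_queue_size) accepts whatever fits, and a pool of workers (standing in for inform_stat.num_threads) drains a fixed number of messages per tick. The traffic numbers are made up for illustration.

```python
# Toy model of a bounded stats queue drained by a fixed worker pool.
# Hypothetical and simplified: not UniFi's actual inform_stat pipeline.


def simulate(arrivals: list, threads: int, per_thread: int, queue_size: int) -> int:
    """Return how many stat messages are dropped over the trace.

    arrivals   -- messages arriving in each tick (a made-up traffic trace)
    threads    -- worker threads (cf. inform_stat.num_threads)
    per_thread -- messages one thread processes per tick (assumed constant)
    queue_size -- queue capacity (cf. inform_stat.thread_queue_size)
    """
    queued = 0
    dropped = 0
    for incoming in arrivals:
        # Enqueue what fits; anything beyond capacity is dropped.
        accepted = min(incoming, queue_size - queued)
        dropped += incoming - accepted
        queued += accepted
        # Workers drain the queue at their combined rate.
        queued -= min(queued, threads * per_thread)
    return dropped


burst = [100, 100, 1000, 1000, 100, 100, 100, 100]
print(simulate(burst, threads=4, per_thread=100, queue_size=500))   # 1100
print(simulate(burst, threads=8, per_thread=100, queue_size=500))   # 1000
print(simulate(burst, threads=4, per_thread=100, queue_size=2000))  # 0
```

In this model, doubling the threads barely helps when the queue is too small to absorb a burst, while a larger queue absorbs it completely. The reverse also holds: under sustained overload a bigger queue only postpones the drops, so more processing capacity is needed.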

MongoDB Tuning: Running an external MongoDB cluster has also been a game-changer, since it lets me scale the database independently from the UniFi Network application. I’ve raised the db.mongo.connections_per_host setting, which enlarges the MongoDB connection pool so fewer threads sit waiting for a Mongo connection; this has improved throughput without overwhelming the CPU. The database runs on a managed MongoDB instance with autoscaling enabled and typically sits at the M30 tier.
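The effect of a larger pool can be illustrated with a small Python model. This is a hypothetical sketch, not the MongoDB driver’s or UniFi’s code: a threading.BoundedSemaphore stands in for a pool of pool_size connections (roughly what db.mongo.connections_per_host sizes), and the function counts how many of `workers` concurrent requests found the pool exhausted and had to wait. It assumes each request needs exactly one connection at a time.

```python
# Hypothetical model of a fixed-size connection pool using a semaphore.
# Not the MongoDB driver's implementation; it only shows who has to wait.
import threading


def count_waiters(pool_size: int, workers: int) -> int:
    """Start `workers` threads that each need one pooled connection and
    return how many found the pool exhausted on their first attempt."""
    pool = threading.BoundedSemaphore(pool_size)
    lock = threading.Lock()
    all_tried = threading.Event()
    tried = 0
    waited = 0

    def worker() -> None:
        nonlocal tried, waited
        got_it = pool.acquire(blocking=False)  # try to grab a free connection
        with lock:
            tried += 1
            if not got_it:
                waited += 1
            if tried == workers:
                all_tried.set()
        if got_it:
            # Hold the connection until every worker has tried once, so the
            # count is deterministic instead of depending on thread timing.
            all_tried.wait()
        else:
            pool.acquire()  # block until another thread releases a connection
        pool.release()  # return the connection to the pool

    threads = [threading.Thread(target=worker) for _ in range(workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return waited


print(count_waiters(pool_size=10, workers=50))  # 40
print(count_waiters(pool_size=50, workers=50))  # 0
```

The trade-off is that every extra pooled connection also consumes resources on the database side, which is one reason to scale the database tier alongside the pool size.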

Addressing Heartbeat Missed or Slow Provisioning

Heartbeat missed or slow provisioning is another common issue when managing thousands of devices. To combat this, I’ve increased the inform.num_thread and inform.max_keep_alive_requests values in the system.properties file. These adjustments have significantly reduced missed heartbeats and improved overall device stability.
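To see why inform.max_keep_alive_requests matters at this scale, here is a back-of-the-envelope Python estimate of connection churn. It assumes, hypothetically, that every device informs once per fixed interval (10 seconds is used below purely as an example value), that each device keeps reusing one connection between informs, and that the controller closes a connection after max_keep_alive_requests requests. Real behavior also depends on idle timeouts, which this ignores.

```python
# Back-of-the-envelope estimate of new TCP connections per second from informs.
# Hypothetical assumptions: each device informs once per fixed interval, reuses
# one keep-alive connection between informs (idle timeouts are ignored), and the
# controller closes that connection after max_keep_alive_requests requests.


def new_connections_per_second(devices: int, inform_interval_s: float,
                               max_keep_alive_requests: int) -> float:
    if max_keep_alive_requests < 1:
        raise ValueError("max_keep_alive_requests must be at least 1")
    requests_per_second = devices / inform_interval_s
    # One new connection is opened for every max_keep_alive_requests requests.
    return requests_per_second / max_keep_alive_requests


# 12,000 devices, with a 10-second interval chosen purely as an example value.
for limit in (1, 100):
    rate = new_connections_per_second(12_000, 10, limit)
    print(f"max_keep_alive_requests={limit}: {rate:.0f} new connections/s")
```

Under these assumptions, 12,000 devices generate 1,200 new connections per second when every request opens a fresh connection, versus 12 per second when a connection serves 100 requests.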

The Importance of Running an External Database

For anyone managing a deployment of more than ~2,000 devices, I can’t stress enough the importance of running an external database. In my setup, offloading the database functions to a separate instance in GCP has reduced the load on my controller server, leading to faster data retrieval and reduced latency. This setup also provides the flexibility to scale the database independently as the network grows.
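As a starting point for the external database setup, the Python sketch below builds the system.properties entries that point the UniFi Network application at an external MongoDB. The key names (db.mongo.local, db.mongo.uri, statdb.mongo.uri, unifi.db.name) are the ones commonly used for external-database setups, but treat them as an assumption and confirm them for your controller version; the function name, host, credentials, and database names are hypothetical placeholders.

```python
# Hypothetical sketch: system.properties entries for an external MongoDB.
# Key names are assumed from common external-DB setups; verify for your version.
# Host, credentials, and database names are placeholders.
from urllib.parse import quote


def external_db_properties(host: str, user: str, password: str,
                           db_name: str = "unifi") -> dict:
    # Percent-encode credentials so characters like '@' or ':' don't break the URI.
    auth = f"{quote(user, safe='')}:{quote(password, safe='')}"
    base = f"mongodb://{auth}@{host}"
    return {
        "db.mongo.local": "false",                  # don't start the bundled mongod
        "db.mongo.uri": f"{base}/{db_name}",        # main application database
        "statdb.mongo.uri": f"{base}/{db_name}_stat",  # statistics database
        "unifi.db.name": db_name,
    }


props = external_db_properties("mongo.example.internal:27017", "unifi", "p@ss:word")
for key, value in props.items():
    print(f"{key}={value}")
```

A managed MongoDB service usually hands you a complete connection string (for example a mongodb+srv:// URI), in which case you would use that string instead of building one.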

Conclusion

The lack of resources on scaling UniFi controllers for large networks has pushed me to learn through trial and error. I hope this article can serve as a practical guide for others facing similar challenges. Optimizing your UniFi controller’s system.properties settings is essential for managing a large-scale network effectively. By fine-tuning JVM memory allocation, threading, and queue sizes, and by avoiding pitfalls like the G1 garbage collector that caused problems in my setup, you can keep your network stable and responsive, even under heavy loads.

Running the controller on a 16-core, 64 GB RAM E2 instance in GCP with an external MongoDB provides the necessary resources, but regular monitoring and testing are key to finding the ideal settings as your demands evolve.